Engineering

The Engineering team works towards enabling technology and innovation pathways for the hospital. They focus on multi-disciplinary engineering and technology development.

On-going projects:

- AR – Augmented Reality

- Surgility

Major Accomplishments

Telemonitoring

The Telemonitoring program started as a single-unit pilot in 2016 to evaluate the use of network video monitoring to observe multiple patients remotely. The idea was to use monitoring technology to provide the same quality of care while reducing the costs associated with constant bedside observation.

We’ve demonstrated a remote observation technician can effectively monitor up to 6 or 7 patients simultaneously. From a centralized location, the remote observation technician is able to watch and redirect patients to avoid falls and / or adverse events, and escalate to a nurse to help attend if redirection is unsuccessful.

Telemonitoring is now live across all UHN inpatient units and has progressively reduced fall rates each year. It has also reduced inpatient waitlist mortality for lung transplant patients from 21% to 5%. During COVID, Telemonitoring helped increase L2 capacity on COVID inpatient units through rapid expansion and integration with vital sign monitors. We’re now focused on expanding Telemonitoring services to long-term care centres and other external partner sites.

Organ Drone Transportation

The Surgility program started in 2019 to better enable technology pathways and innovation across operating rooms and surgery care units.

Projects within the portfolio highlight diverse, innovative ideas from our clinical leads, and many ways in which engineering design and technology address gaps and challenges.

In September 2021, we worked with Unither Bioélectronique, Trillium Gift of Life Network, Ajmera Transplant Centre, and the Toronto Lung Transplant Program to complete the world's first lung transplant delivered by drone. This effort will widen the availability of and access to donor organs through an autonomous aerial transportation network. It will provide faster end-to-end delivery from hospital to hospital than traditional retrieval, which relies on slow commercial or costly charter flights and has multiple waypoints between hospitals.

We helped scope site selection and preparation of takeoff and landing areas, develop operation process models and protocols for deployment of internal and external teams, design and build takeoff and landing platforms, and optimize access and flow between areas with Security and Facilities.

Mixed Reality Bennett Fracture Simulator

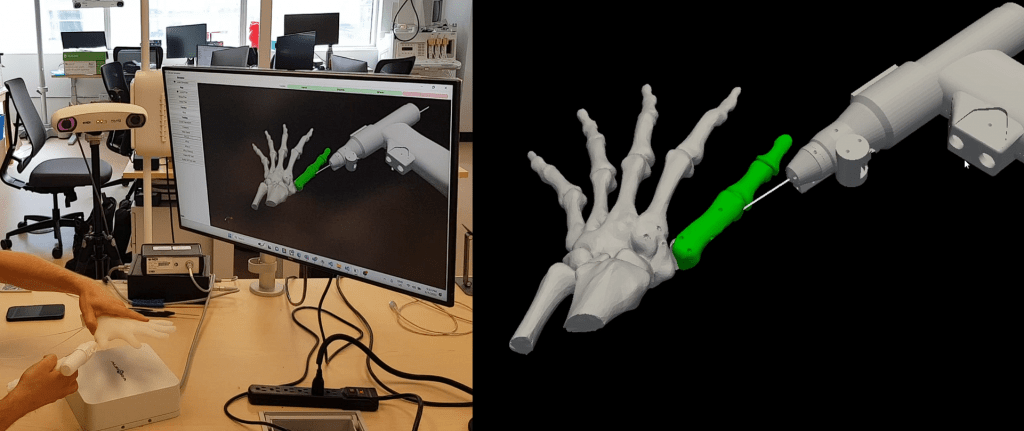

Working with Dr. Blake Murphy, Dr. Joseph Catapano, and Dr. Stefan Hofer, the team designed and developed a Bennett fracture simulator to teach virtual k-wire placement to learners across different skill levels. The simulator combines a 3D printed and silicone-cast physical model with electromagnetic navigation for real-time k-wire guidance and feedback. It also provides virtual oblique x-rays to evaluate k-wire placements against optimal target trajectories. The Bennett fracture simulator was awarded the Best Translational Research Award at the 38th Annual Division of Plastic, Reconstructive, and Aesthetic Surgery Research Symposium, as well as the J.F. Murray Award for Excellence in Surgery of the Hand and the Innovation Award Second Prize at the 2023 Canadian Society of Plastic Surgery annual meeting.

PACDASH (Preadmission Clinic Dashboard)

With the onset of the pandemic, a better communication tool was needed for interdisciplinary teams to manage virtual visits and help triage more volume to help with surgical backlog. Working with Surgical Services and TGH / TWH pre-admission clinics, Techna Transformatics and Surgility teams helped design, develop, and implement a new dashboard tool to better provide visibility of appointment status across provider roles and enable team communication in a hybrid on-site and virtual environment. Project highlights were presented at the 2022 e-Health Conference in the Digital Innovation category.

The Surgility team manages and supports printing requests for the new multi-material polyjet printer in Techna’s Image Guided Discovery Lab (IGDL) in the basement of Toronto General Hospital. The multi-material printer can print over a large build volume (350 x 350 x 200 mm) by jetting photopolymer droplets that are rapidly UV cured. Multiple printing jet heads allow materials with different properties to be cured during the printing process, including mixed colors and a range of Shore hardness values. The technology has helped produce high fidelity models used for training and for planning complex procedures, as well as tooling to support other projects such as augmented reality, registration and tracking assemblies, and eye tracking.

In addition to housing the new polyjet printer, the IGDL space has continued to serve as a 3D printing and rapid prototyping hub at TGH following the 3D printing demands of the pandemic, providing an environment for resource contributions and knowledge sharing between our team and the Advanced Perioperative Imaging Lab (APIL) under Dr. Azad Mashari.

Patient Models and Tooling

The ability to combine multiple material properties in a single print on the polyjet has improved efficiency in fabricating high fidelity models involving multiple segmentations of anatomical and disease regions, which would otherwise require sequencing printing order for different segmentations or parallel printing across multiple printers. These include models for preoperative assessment of apical thoracic malignancies to help identify critical neurovascular structures, sarcoma models to better contextualize bone invasion and optimize, and use of physical models to communicate procedural details and context with patients and their families.

Functionality to print and mix color also enables adding image-based features to a 3D print, where textures can be mapped on the printed 3D surface, allowing computer vision and photogrammetry techniques to be utilized for 3D scanning, tracking, and registration. This pipeline helps support integration with other technologies and applications such as model and tool tracking across our augmented reality projects, and automated methods for quantifying suturing performance with Penrose phantoms used in the 2022 Thoracic Surgery Bootcamp.

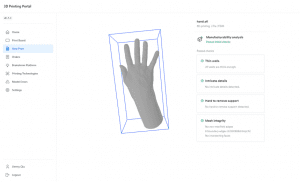

3D Printing Portal

The pandemic has also brought together multiple independently managed 3D printing resources and shops across UHN, including Surgility, Advanced Perioperative Imaging Lab, Princess Margaret Machine Shop, and the Guided Therapeutics Lab.

As pandemic production demands have ramped down, our engineering team has resumed work on the printing portal, which combines independently managed 3D printing resources to facilitate requests and manage the end-to-end process. The development includes services for authentication and authorization, request submission, model upload and analysis, cost estimation, a centralized request pool, and printing parameter estimation based on machine learning.

Testing with print shop partners across service features and current cost modelling will commence over the fall, with the release of the portal and newsletter to the research community for end of the 2022 calendar year.

Figure 1. Thoracic tumor with critical structures fabricated on the polyjet printer.

Figure 2. 3D print of osteochondroma for pre-operative planning.

Figure 3. 3D printing portal web dashboard.

Figure 1. Cyberworks Robotics autonomous wheelchair prototype.

Moving patients around the hospital between floors with complex layouts has been an on-going problem. There is often a backlog of inpatients waiting to get imaging done since there are not enough staff available to support transportation of patients from inpatient units to the imaging department on-time for their scheduled scans. The Surgility team is working with Cyberworks Robotics to test the feasibility of an autonomous wheelchair system to independently transport a patient from an inpatient unit to the imaging department. The wheelchair system uses LIDAR technology and several depth-sensing cameras to map out the wheelchair’s surroundings, detect / move around obstacles, and identify the ideal path across locations.

The Surgility team has assisted with test runs of the system in the basement of TGH, troubleshooting hardware issues with the wheelchair components / electronics, providing consultation on how the system could integrate with hospital workflows and implementing safety measures. Some of the safety measures involved working with the original manufacturer of the electronic wheelchair to install a standard wheelchair seatbelt and testing emergency stop functionality. It was determined that a secondary touchscreen monitor would need to be installed so that an operator can shadow during testing and access the computer controls. The team worked with the original developers of the sensing / navigation technology to identify a compatible touchscreen monitor that can be purchased through a Canadian vendor. An optimal mounting solution was also identified so that the monitor would not interfere with existing hardware components, but still provide flexibility to collapse the system down for transport if needed.

Currently, the team is assisting with the design and requirements of a small-scale feasibility study to test the system’s reliability and repeatability in transferring able-bodied patients. The study will assess the technology with integrations between the wheelchairs and the Fleet Management Software, allowing staff to track the real-time location of the wheelchairs remotely. The team is working with Cyberworks Robotics to review technology readiness for trial, including reviews of code and data, and to identify the key functionalities and features needed.

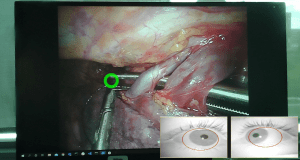

Eye tracking in surgery may give insight into the cognitive process across different surgical tasks and across surgeons with different levels of experience, and can convey the area of visual focus to supplement communication. There is currently a lack of translation examples in literature and practice for eye tracking and the use of gaze information in the surgical field. Specifically, there is limited understanding of the benefits related to improved communication and education demonstrated in other fields, and of the use of metrics indicative of cognitive load that can help better manage challenging tasks and safety. Furthermore, no vendors currently offer integrated eye tracking hardware or software for fusion with video systems and display monitors.

The Surgility team has continued work with Dr. Kazuhiro Yasufuku and lead project collaborator Andrew Effat on developing and evaluating gaze tracking technologies for surgery. Three pipelines are being evaluated and advanced to translate the technology for use in environments for learning and the OR. These include an in-house fabricated headset, a commercial screen-based device, and a machine learning neural network model.

Headset

A standardized competitive analysis of commercially available headsets for technology readiness and opportunity was performed in the Image Guided Discovery Lab and ORs with participant groups consisting of engineers, residents, and fellows. For headsets (Tobii, Pupil Labs, Argus), factors evaluated include

- Cost

- Accuracy

- Comfort

- Application programming interface (API)

- Available data

Based on the evaluation, the Pupil Labs headset demonstrated the biggest advantage in innovation opportunity due to its open-source design allowing for customization and improvements along with its more accessible cost.

From the original Pupil Labs open-source design, the team refined an in-house monocular headset. With focus on improving gaze accuracy and comfort, three main iterations were produced.

- Added a second eye camera enabling binocular eye tracking and gaze triangulation

- Integrated a novel gaze estimation algorithm that better compensates for angular offsets

- Updated the calibration software for a simpler and more accurate calibration

- Added adjustable behind-the-head and overhead straps to reduce the risk of slippage and distribute weight

- Incorporated novel manufacturing techniques utilizing flexor joints to create flexible temple arms

- Modified the nose bridge to improve comfort and accommodate a wider variety of face shapes
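As a rough illustration of what binocular gaze triangulation involves, the sketch below estimates a 3D point of regard as the midpoint of the shortest segment between the two eyes' gaze rays. It is a generic geometric construction and not the team's gaze estimation algorithm, which is not described here; the function name `triangulate_gaze` and the example eye positions are assumptions made for illustration.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate_gaze(o_left: Vec3, d_left: Vec3, o_right: Vec3, d_right: Vec3) -> Vec3:
    """Midpoint of the closest approach between two gaze rays.

    Each ray is origin + s * direction. Directions need not be unit length.
    (Hypothetical helper; a generic closest-point construction.)
    """
    w = tuple(a - b for a, b in zip(o_left, o_right))
    a = _dot(d_left, d_left)
    b = _dot(d_left, d_right)
    c = _dot(d_right, d_right)
    d = _dot(d_left, w)
    e = _dot(d_right, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("gaze rays are parallel; no unique closest point")
    s = (b * e - c * d) / denom  # parameter along the left ray
    t = (a * e - b * d) / denom  # parameter along the right ray
    p_left = tuple(o + s * k for o, k in zip(o_left, d_left))
    p_right = tuple(o + t * k for o, k in zip(o_right, d_right))
    return tuple((p + q) / 2 for p, q in zip(p_left, p_right))


# Illustrative values only: eyes 6 cm apart, both directed at a point 60 cm ahead.
point_of_regard = triangulate_gaze(
    (-3.0, 0.0, 0.0), (3.0, 0.0, 60.0),
    (3.0, 0.0, 0.0), (-3.0, 0.0, 60.0),
)
```

Using the midpoint rather than an exact intersection matters in practice because measured gaze rays rarely intersect exactly.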

Screen-Based

Data processing and performance were optimized for the GazePoint screen-based tracker through in-lab and OR testing, with desktop monitor setups as well as OR video routing to sterile field monitors.

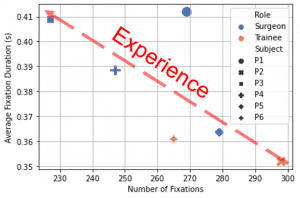

The GazePoint device has been used in both the 2022 and 2023 Thoracic Surgery Bootcamp assessing the role of eye tracking as a method to evaluate surgical experience. Initial data collected aligns with reported results found in other sectors, where lower experience levels correlate with more scattered fixation areas and shorter attention time across areas.
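The fixation metrics mentioned above (number of fixations and average fixation duration) can be derived from raw gaze samples in several ways. The sketch below uses a dispersion-threshold approach (commonly called I-DT) purely as an illustration; how fixations were actually identified in the bootcamp data is not stated here, and the thresholds, sample data, and function names are assumptions.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (time_ms, x_px, y_px)


def _dispersion(window: Sequence[Sample]) -> float:
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples: Sequence[Sample], max_dispersion: float,
                     min_duration: float) -> List[Tuple[float, float]]:
    """Return (start_ms, end_ms) for each fixation, using dispersion thresholding."""
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the initial window until it spans at least min_duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the gaze stays tightly clustered.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            fixations.append((samples[i][0], samples[j][0]))
            i = j + 1
        else:
            i += 1
    return fixations


def summarize(fixations: Sequence[Tuple[float, float]]) -> Tuple[int, float]:
    """Number of fixations and mean fixation duration in ms."""
    if not fixations:
        return 0, 0.0
    durations = [end - start for start, end in fixations]
    return len(durations), sum(durations) / len(durations)


# Made-up samples at 10 ms intervals: two steady clusters joined by a short saccade.
samples = [(10.0 * k, 100.0, 100.0) for k in range(15)]
samples += [(150.0, 150.0, 125.0), (160.0, 200.0, 150.0), (170.0, 250.0, 175.0)]
samples += [(180.0 + 10.0 * k, 300.0, 200.0) for k in range(15)]

fixations = detect_fixations(samples, max_dispersion=20.0, min_duration=100.0)
fixation_count, mean_duration_ms = summarize(fixations)
```

With these made-up samples the sketch finds two fixations of 140 ms each; real recordings would need thresholds tuned to the tracker's sampling rate and noise.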

The team also supported the invention disclosure filing with Brian Bobechko and the Technology Development and Commercialization Office, with study design and data collection to test and refine the technology and processes.

Webcam Eye Tracker using Machine Learning

The team has implemented and continually improved upon a system that estimates the user’s point of regard on a computer monitor using a webcam mounted on top of the screen and a convolutional neural network model. The webcam recurrently captures images of the user’s eyes and passes the eye images through a deep convolutional neural network that has been trained to estimate the user’s gaze direction from the appearance of their eyes and the location of their head with respect to the camera.

The team has experimented with several different neural network architectures and datasets for training the model, including performant gaze estimation datasets in the literature as well as a custom dataset containing labelled eye images of the end-user. Ultimately, the end goal is to produce a robust gaze estimation pipeline for surgeons in the OR, effectively illustrating the surgeon’s real-time gaze on a monitor using an off-the-shelf webcam. Surgility will continue to benchmark these models and assess their efficacy in the OR.

Figure 1. New binocular eyetracking system: gaze overlay and tracked pupils.

Figure 2. In-house designed eye tracking headset.

Figure 3. Measurement collection with participant at the thoracic surgery bootcamp.

Figure 4. Correlation between experience level, number of fixations, and average fixation duration from observing laparoscopic video.

Photo Documentation for Cytoreduction Procedures

In advanced ovarian cancer, the most significant prognostic factor is achieving complete cytoreduction at the time of primary surgery. Standard operative reporting in ovarian cancer consists of listing the location of tumour seen at the beginning of surgery and then at the end of the procedure. However, reliance on written or dictated descriptions alone may not completely describe residual tumor burden due to varying detail and interpretation of the description.

At UHN, gynecologic oncology teams have started objective documentation of residual tumour with photos for cytoreductive surgery. Photo documentation provides visible light evidence of pre-operative and post-operative states of the procedure for case reporting. It also enables the opportunity to improve description of disease location and tumour burden which may improve survival as appropriate adjuvant therapy and novel targeted therapies can be given to patients with suboptimal cytoreduction. Prior to working with the Surgility team, a number of barriers existed for implementation of photo documentation into practice. These challenges include

- Management of case photos and sensitive data (patient and procedural info)

- Surgical fellows managed process across devices

- Manual process for photo retrieval, annotation, and distribution

- Lack of centralized archiving of photos for case reporting and review

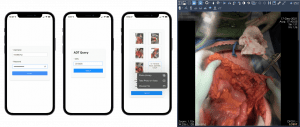

Working with Dr. Marcus Bernardini and gynecologic oncology fellow Dr. Sabrina Piedimonte, the Surgility team helped design and develop a photo upload and documentation application that can be used with existing iPhone devices used for photo capture during cytoreduction procedures. The application implements Security and Privacy recommendations and interfaces with a backend server for conversion of uploaded photos to DICOM for storage in PACS.

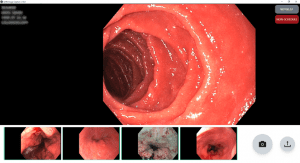

Endoscopy Image Capture

In preparation for the EPIC go-live, the Surgility engineering team supported the Synapse and Medical Engineering teams in modernizing legacy image capture applications and services across endoscopy and cystoscopy units. At the end of 2020, the UHN in-house legacy image capture framework was nearing 20 years old, with development support long abandoned. Furthermore, the capture framework only supported standard definition captures (up to 720 x 480 resolution). The legacy reliance on admission, discharge, and transfer (ADT) interfaces and workflows was also incompatible with EPIC and the Lumens endoscopy module, which needed to support imaging orders and embedded reports.

As part of EPIC Lumens integration, the Surgility team designed and developed a new EPIC compatible image capture application and supporting services. Features include

- EPIC Lumens compatible image capture application and supporting services

- High-definition image capture (1920 x 1080)

- Responsive interface supporting multiple workstation display ratios (4:3, 5:4, 16:9, 16:10) and resolutions (640 x 480 to 2560 x 1440)

- Compatibility with any USB video class (UVC) capture hardware

- Conversion to DICOM series and upload to JDMI PACS

- Interfacing with enterprise service bus and JDMI PACS according to EPIC and Lumens specifications

- Ability to load trained AI models

A major component of the successful EPIC integration was the implementation of HL7 services that receive imaging orders from Lumens and supply the unique image identifiers after successful PACS upload. The orders interface automatically generates and updates the procedure worklist based on the HL7 ORM messages received. Patient, physician, and procedural identifiers are automatically retrieved from the procedure worklist to map and fill the corresponding DICOM tags, ensuring data quality during DICOM conversion and transfer for storage. Upon successful storage in JDMI PACS, corresponding HL7 MDM messages are sent back to EPIC, specifying each image in the uploaded series with a unique document key for image linking. Lumens then uses the document keys to query and retrieve images through the new image retrieval interface implemented by the JDMI PACS team to support image embedding in reports.
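To make the order-to-DICOM mapping more concrete, the following is a minimal sketch of reading an HL7 v2 ORM message and filling a few DICOM attributes from it. It is not the UHN implementation: the sample message, the choice of which HL7 fields feed which DICOM attributes (for example, using the placer order number in ORC-2 as the accession number), and the function names are all assumptions for illustration.

```python
from typing import Dict, List


def parse_segments(message: str) -> Dict[str, List[str]]:
    """Split an HL7 v2 message into {segment_id: fields}, keeping the first of each."""
    segments: Dict[str, List[str]] = {}
    for line in message.replace("\n", "\r").split("\r"):
        line = line.strip()
        if line:
            fields = line.split("|")
            segments.setdefault(fields[0], fields)
    return segments


def get_field(segments: Dict[str, List[str]], seg_id: str, index: int) -> str:
    """Return field `index` of a segment, or '' if absent (not valid for MSH)."""
    fields = segments.get(seg_id, [])
    return fields[index] if index < len(fields) else ""


def get_component(value: str, index: int) -> str:
    parts = value.split("^")
    return parts[index] if index < len(parts) else ""


def orm_to_dicom_tags(message: str) -> Dict[str, str]:
    """Map order fields to DICOM attribute keywords (hypothetical mapping)."""
    seg = parse_segments(message)
    return {
        "PatientID": get_component(get_field(seg, "PID", 3), 0),
        "PatientName": get_field(seg, "PID", 5),  # already caret-delimited
        "AccessionNumber": get_field(seg, "ORC", 2),  # assumption: placer order number
        "RequestedProcedureDescription": get_component(get_field(seg, "OBR", 4), 1),
    }


# Fabricated example order; identifiers are placeholders, not real data.
SAMPLE_ORM = "\r".join([
    r"MSH|^~\&|EPIC|UHN|CAPTURE|UHN|202301011200||ORM^O01|MSG0001|P|2.3",
    "PID|1||MRN12345||DOE^JANE",
    "ORC|NW|ORD0001",
    "OBR|1|ORD0001||ENDO^Upper endoscopy",
])

tags = orm_to_dicom_tags(SAMPLE_ORM)
```

Filling tags from the order rather than from manual entry is what keeps identifiers consistent between the worklist, the DICOM series, and the MDM message sent back.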

In alignment with future AI opportunities and enabling integration and translation pathways, the application also allows for loading of AI models to apply to real-time video data where model output is visualized as an overlay layer.

The new image capture application and supporting services are live across

- TGH and TWH Endoscopy and Cystoscopy Units

- Cardiovascular Intensive Care Unit

- Medical Surgical Intensive Care Unit

Figure 1. Cytoreduction case photo documentation on iPhone and DICOM conversion for PACS.

Figure 2. New image capture application for high-definition capture, conversion to DICOM, and integration with EPIC Lumens

OR Efficiency

Team members from both the Surgility and Techna Transformatics (Kelly Lane, Agata Misiura, Linda Eum) teams were engaged by Surgical Services to help apply LEAN methodologies to reduce backlog, analyze delay variables and contributing factors, and maintain high quality of care. All non-emergency procedures (scheduled procedures only) for the cardiac and otolaryngology services were chosen as areas of initial focus. The teams helped evaluate patient flow through the perioperative pathway to identify opportunities for flow optimization across bottlenecks, redundancies, and inefficiencies. Some key areas for focus were

- Improving first case start times

- Reducing turnover times between cases

- Reducing unplanned overtime hours in the Toronto General Hospital ORs

All roles were observed to help generate process models for assessment of tasks and refinement, including

- Surgeons (staff, fellows, residents)

- Anesthesia (staff, fellows, residents)

- Nursing

- Patient transport

- Environmental services

- Clerical staff

Patient and staff workflows were observed throughout the entire perioperative pathway, including the surgical admission unit (SAU), preoperative care unit (POCU), OR, and post anesthesia care unit (PACU). The team supported the work through historic data analysis of delay factors and documented times, review of findings and implementations at other centres, and application of LEAN tools to generate process models, map staff movement across procedure times, and measure process times across the flow of tasks and information. Staff interviews and surveys were also conducted to provide additional insight and feedback. The teams compiled findings, analysis results, and recommendations for presentation to Surgical Services and leadership.

The engineering team also supported assessment of existing data quality and opportunities for improved procedure time estimates. Regression models were trained utilizing historic data and compared to ORSOS estimates of procedural times. Refined modelling demonstrated improvement in estimates across procedural time accuracy and spread.
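As a simplified illustration of comparing a regression-based estimate against existing booked times, the sketch below fits a single-feature least-squares line and compares mean absolute error. The features, model type, and data the team actually used are not described here; the numbers below are invented, the function names are hypothetical, and the error is computed on the same data used for fitting, which a real evaluation would avoid.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mean_abs_error(predicted: Sequence[float], actual: Sequence[float]) -> float:
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


# Invented example: booked procedure times vs. recorded durations (minutes).
booked_minutes = [60, 90, 120, 150, 180]
actual_minutes = [75, 100, 140, 165, 200]

slope, intercept = fit_line(booked_minutes, actual_minutes)
refined_minutes = [slope * b + intercept for b in booked_minutes]

baseline_mae = mean_abs_error(booked_minutes, actual_minutes)
refined_mae = mean_abs_error(refined_minutes, actual_minutes)
```

In this toy data the booked times systematically underestimate duration, so even a one-variable correction lowers the error; with real data the comparison should be made on held-out cases.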

Endoscopy Efficiency

Patient throughput at the Toronto General Hospital (TGH) and Toronto Western Hospital (TWH) Endoscopy Clinics has been drastically impacted by COVID-19. Neither clinic has been able to run at full capacity, creating a backlog of patient procedures. To improve operational efficiency and reduce the backlog, the Surgility and Techna Transformatics teams worked with the TGH and TWH Endoscopy Clinics to optimize current processes and implement rapid improvement events using LEAN methodologies.

The areas of focus for this project included all areas and flow within the TGH and TWH Endoscopy Clinics, with specific focus on

- Procedure volumes

- First case on-time start and delay reasons

- Procedure and cancellation volumes

- Late finish counts and timing

Observations and process optimization were conducted across all roles, including

- Registration clerks

- Nurses (COVID swabbing, charge, pre-assessment, procedure, and recovery)

- Attendants (front, back, float)

- Endoscopists (staff and fellows)

- Anesthesia (staff and fellows)

- Porters

- X-ray technicians

- Patient types (same day, in-patients, and isolation patients)

Common pain points for both clinics revolved around staffing, scheduling, and repetitive tasks throughout the patient flow. The final findings and a list of recommendations for rapid improvement events were compiled and presented to Endoscopy Leadership. Many recommendations have been implemented post presentation and review, and others are in progress or scheduled, with a few remaining to be completed in the near future.

Scope Reprocessing Efficiency

Toronto Western Hospital (TWH) and Toronto General Hospital (TGH) have a high throughput of cases in their Endoscopy and Cystoscopy clinics, resulting in a high volume of endoscopes for reprocessing throughout the clinic day. Endoscope and cystoscope reprocessing is a core process supporting the Endoscopy and Cystoscopy Clinics. This process needs to be completed efficiently and in alignment with infection prevention and control standards.

Following the completion of a Lean Efficiencies project that focused on patient flow through the Endoscopy Clinic at both TGH and TWH, team members from Techna’s Surgility and Transformatics (Kelly Lane, Agata Misiura, Linda Eum, Helena Hyams) teams were engaged to conduct similar process analysis using Lean methods for endoscope and cystoscope reprocessing pathways.

The goal of the Scope Reprocessing Lean project was to evaluate endoscope and cystoscope reprocessing workflows at TGH and TWH using Lean analysis methods. Through literature review, shadowing, process mapping, and data analysis, the project aimed to identify opportunities to optimize endoscope and cystoscope reprocessing at TGH and TWH.

Objectives of the project included the identification of relevant metrics based on literature, the analysis of workflows, staffing, scope inventory, and physical space utilizing Lean methods, and the synthesis of the observations and findings into recommendations on areas of opportunity for efficiencies. The analysis and recommendations were in alignment with UHN policies, CSA standards, and related infection prevention and control guidelines.

Areas of opportunities for both hospital sites were grouped into themes such as space and layout, education, visibility, maintenance, and tools. 22 opportunities were put forward, 8 for TGH and 14 for

Neurosurgery Navigation Evaluation

From October 2020 to March 2021, the Surgility team supported Medtronic engagement and gap assessment for the neurosurgery navigation fleet refresh. Working with Dr. Suneil Kalia and Dr. Ivan Radovanovic, the team observed the use of current navigation systems across procedures for deep brain stimulation (DBS) electrode placement for Parkinson’s, laser interstitial thermal therapy (LiTT) for epilepsy, and microsurgical aneurysm clipping.

The team generated process models for the procedures, highlighting tasks that interact with the system and current pain points that new systems under consideration should address. Findings from the gap analysis and technology assessment were detailed in the RFP consideration list shared with the clinical team and Medical Engineering, covering remote planning, data transfer and management, data and system interoperability, and digital subtraction angiography image merge. The team worked with Medtronic and Brainlab teams to review and test solutions for the gaps identified, and shared the resulting feature details and vendor comparisons with Medical Engineering for the RFP.

J & J Velys Navigation Trial

The Surgility team assisted with a technology review and assessment of different surgical planning tools, navigation, and robotic systems that can be used for hip, knee, and shoulder procedures. Several features and functions were compared, including pre-operative imaging, interoperability, intra-operative use, integration with PACS, and post-op analytics. The Johnson & Johnson (J&J) DePuy Velys Hip Navigation system was chosen to move forward for trial at Toronto Western Hospital with Dr. Michael Zywiel. The team supported J&J engagement for review of Velys system details and features, review of technical documentation, trial evaluation metrics, planning, and the approval process. Medical Engineering and the Johnson & Johnson representative inspected and set up the device at the beginning of March 2022, and the surgical team then used it for three months, from March to May 2022. Metrics such as templating accuracy, operative time, and cup positioning were evaluated throughout the trial, along with the change in workload for X-ray techs measured using the NASA task load index.

Medtronic Situate

To assess radiofrequency ID (RFID) tagged sponge detection technologies that support sponge counting and prevent never events, the Surgility team supported the Medtronic Situate trial across hepato-pancreato-biliary / general surgery, abdominal transplant, and gynecologic oncology open cases from November to December 2021, followed by completion of user evaluations in January 2022. Over the trial duration, the system demonstrated benefits as an adjunct technology to support existing counting processes. Introducing the RFID scanning process for the patient body prior to closing helped validate total counts, reconcile miscounts, and detect and locate sponges quickly, with high staff satisfaction in ease of use and integration with existing processes. Post trial, the team worked with OR Supply Chain and Surgical Services across additional vendor engagements to compare and assess the Stryker SurgiCount+ and HaldorTech ORLocate solutions.

Fujifilm Synapse3D

The Surgility team supported application review and deployment for the Fujifilm Synapse3D imaging application for thoracic surgery planning, including setup and install on UHN servers and interfacing with PACS. The Synapse3D application enables segmentation of lung, pulmonary artery, pulmonary vein, bronchi, and lung nodules to identify optimal paths leading to lung lesions and provide simulations for virtual bronchoscopy and resection. The application is currently being reviewed through the PReoperative IMaging Evaluation Survey with 3D reconstruction (PRIMES-3D) trial with Dr. Alexander Gregor and Dr. Kazuhiro Yasufuku to assess the added value of Synapse3D assisted 3D reconstruction and pre-operative review for anatomic lobectomy and segmentectomy.

Figure 1. Medtronic Situate allows detection of RFID-tagged sponges to supplement counting and locating sponges.

Augmented Reality (AR)

Augmented reality (AR) integrates virtual information with the physical environment, allowing high-fidelity experiences through visual and spatial feedback to improve spatial context and help manage complexity. On-site restrictions and the transition to more virtual learning due to the COVID-19 pandemic have also highlighted the value of AR based applications to support competency-based education and enhance learning content.

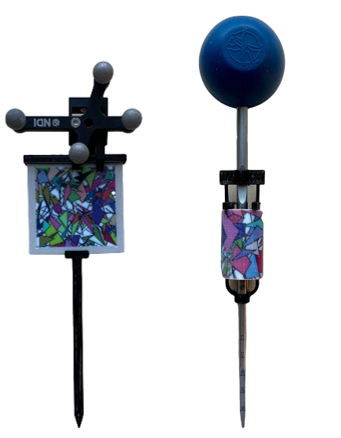

Over the pandemic, our engineering team worked to develop real-time tracking for use with AR guidance, first with gold standard stereo infrared (IR) optical and electromagnetic (EM) tracking systems, followed by tracking with web and mobile device cameras, which allows for broader accessibility beyond specialized environments or tracking equipment. A server and client framework was also developed, allowing multiple client types (browsers, mobile devices, headsets) to connect simultaneously and communicate with each other (for example, sharing spatial registration information and real-time positions of tracked tools). These developments enabled technology translation for more immersive AR applications when combined with 3D modelling and fabrication techniques.

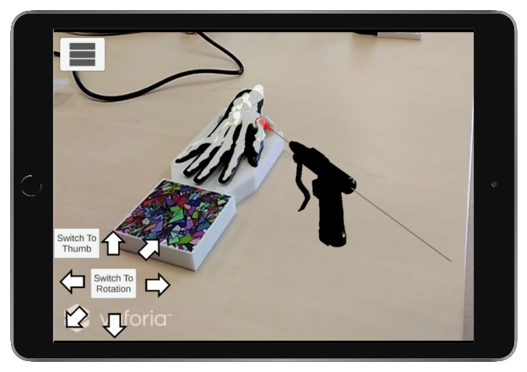

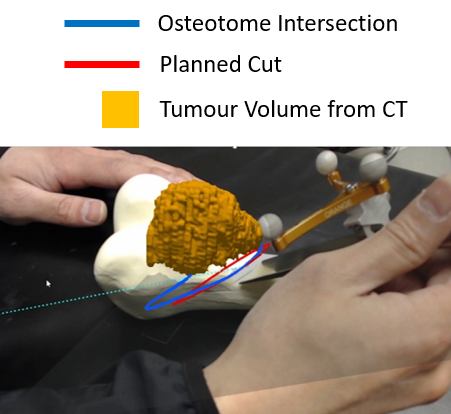

K-wire Fixation Simulator

In collaboration with and under the oversight of Dr. Stefan Hofer, Dr. Blake Murphy, Dr. Joseph Catapano and Dr. Mark Shafarenko, a hand model was developed using reference CT and artificial breaks representative of Bennett’s fracture. The model includes 3D printed bone with silicone soft tissue and embedded EM sensors. The design allows the thumb to move independently of the rest of the hand and integrates co-modality tracking through EM and mobile systems, enabling AR visualization and guidance with the simulator K-wire driver. The simulator application provides real-time visualization and feedback of k-wire advancement relative to the hand model and allows evaluation of performance via simulated oblique x-rays and comparisons with planned trajectories.

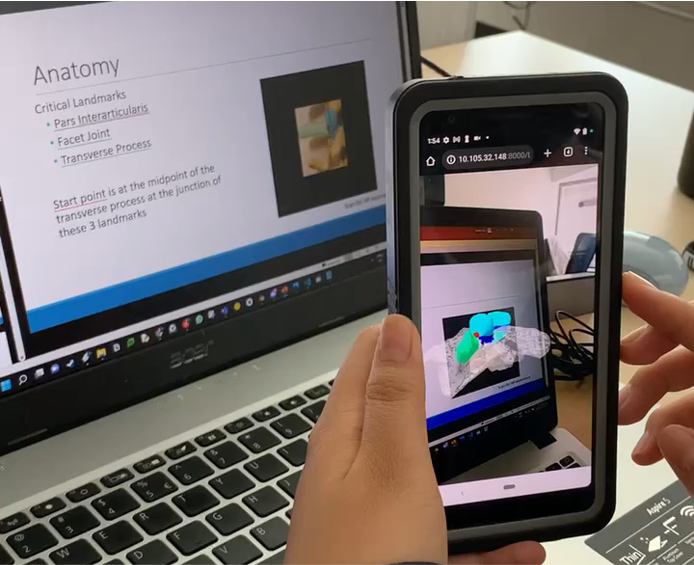

Pedicle Screw Placement Simulator

Working with Dr. Christopher Nielsen and Dr. Samuel Yoon, 3D models of the L5, T10, and C5 segments were created from CT and 3D printed with trackable image targets for tabletop use. AR allows virtual pedicle screw placements to be visualized in tandem with a full virtual spine and labeled anatomical regions over the physical model. A 3D printed stylus was also designed and calibrated, enabling learners to pick entry points and pedicle screw trajectories for evaluation of potential pedicle screw breach and distance / angular errors compared to optimal trajectories.
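The distance and angular errors mentioned above can be computed from an entry point and a direction vector for each trajectory. The sketch below shows one straightforward way to do so; the coordinate values and function names are hypothetical, and the simulator's own evaluation (including breach detection) is not reproduced here.

```python
import math
from typing import Sequence


def entry_distance(chosen_entry: Sequence[float], optimal_entry: Sequence[float]) -> float:
    """Euclidean distance between the chosen and optimal entry points."""
    return math.dist(chosen_entry, optimal_entry)


def angular_error_deg(chosen_dir: Sequence[float], optimal_dir: Sequence[float]) -> float:
    """Angle in degrees between two trajectory direction vectors."""
    dot = sum(a * b for a, b in zip(chosen_dir, optimal_dir))
    norm_chosen = math.sqrt(sum(a * a for a in chosen_dir))
    norm_optimal = math.sqrt(sum(b * b for b in optimal_dir))
    if norm_chosen == 0 or norm_optimal == 0:
        raise ValueError("direction vectors must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_chosen * norm_optimal)))
    return math.degrees(math.acos(cosine))


# Illustrative values in millimetres, in the model's coordinate frame.
distance_mm = entry_distance((0.0, 0.0, 0.0), (3.0, 4.0, 0.0))
angle_deg = angular_error_deg((0.0, 0.0, 1.0), (0.0, 1.0, 1.0))
```

Both inputs must be expressed in the same registered coordinate frame for the comparison to be meaningful.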

Elbow Arthroscopy Simulator

Elbow arthroscopy is a challenging procedure for learners due to limited educational exposure in residency, technical challenges with portal placements, and anatomical considerations such as narrow joint space and neurovascular anatomy. Working with Dr. Oren Zarnett, the Surgility team designed and developed virtual reality elbow arthroscopy modules to help provide better spatial context for learners, including the practice of portal placements for instruments and viewing, virtual arthroscopy views simulating 30-degree scopes to visualize anatomical structures in narrow joint spaces, and quantitative evaluation of spatial understanding of the procedure and anatomy. Soon, the simulator will be trial tested to gain valuable feedback on further optimizations to streamline elbow arthroscopy education.

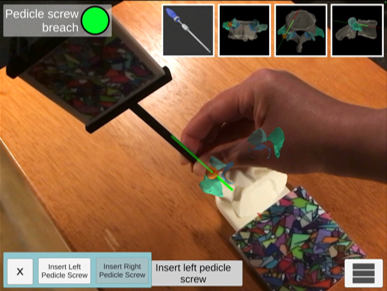

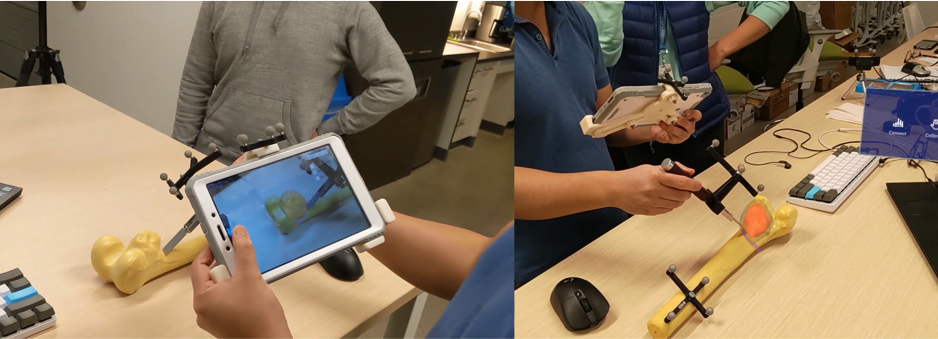

Tracking and Technical Assessments

Guided osteotomies on sawbone femurs were performed under AR guidance. The resulting entry positions and cutting planes matched the positions and orientations of the planned cuts for the sarcoma model and were consistent with previous results obtained using traditional navigation and image guidance. Comparisons between tracking modalities, evaluated with a 243-point measurement phantom across a 16 x 16 x 18 cm volume, showed that image-based tracking using web or mobile device cameras presented a cost-effective solution. Results of the tracking performance and assessment were presented at the 2023 SPIE Advanced Biomedical and Clinical Diagnostic and Surgical Guidance Systems conference, demonstrating that tracking with low-cost monocular cameras can be suitable for conveying spatial context over a desktop working volume for learners.
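One common way to summarize tracking performance against a measurement phantom is the root-mean-square and maximum of the per-point position errors. The sketch below illustrates that calculation only; the metrics actually reported are not stated here, and the function name and sample coordinates are assumptions.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def tracking_error_summary(reference: Sequence[Point],
                           measured: Sequence[Point]) -> Tuple[float, float]:
    """Return (rms_error, max_error) over paired reference and measured points."""
    if len(reference) != len(measured) or not reference:
        raise ValueError("need equal-length, non-empty point lists")
    errors = [math.dist(r, m) for r, m in zip(reference, measured)]
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return rms, max(errors)


# Two made-up phantom points (cm); a real phantom would contribute all 243.
reference_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
measured_points = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]

rms_error, max_error = tracking_error_summary(reference_points, measured_points)
```

Reporting both values helps distinguish a tracker that is slightly off everywhere from one that is accurate in most of the volume but fails near its edges.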

Web-based AR

To incorporate and adopt AR into existing curriculum, web-based AR enables the benefit of enhanced spatial understanding through interactive 3D models accessible through mobile / laptop displays and cameras. Working with Dr. Christopher Nielsen and Dr. Samuel Yoon, a sawbone model of the spine was CT scanned and segmented to match anatomical regions highlighted in 2D images used in existing learning slides. Existing slide images were enhanced into AR image targets to load and display virtual 3D models when viewed with a mobile or web camera in the browser where learners can interact with the models and view animated procedural tasks in 3D.